
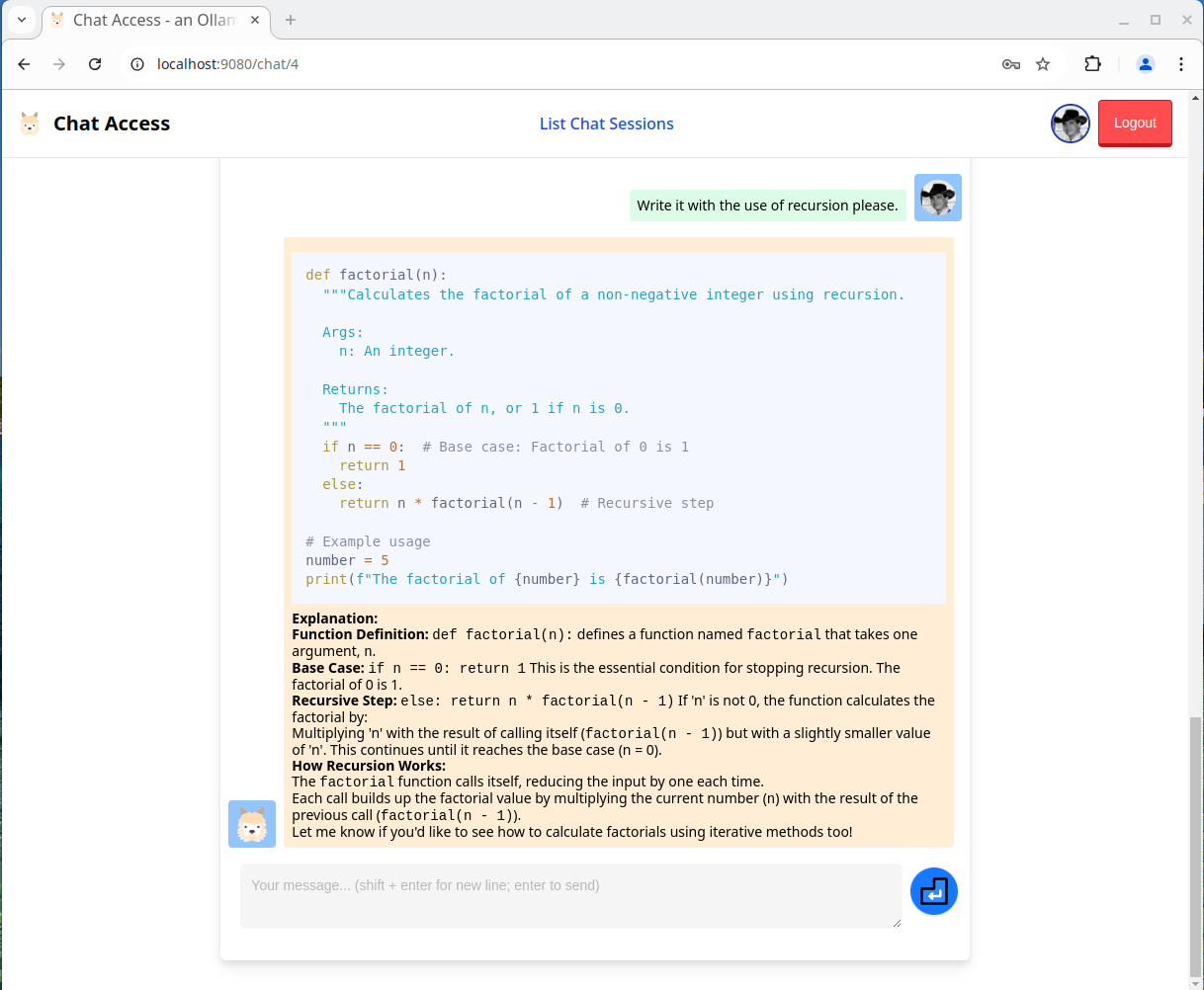

Ollama Chat Access Web UI

Table of Contents

- Requirements

- Short demo (8min video)

- How to build and run

- Accessing the Web Interface

- Using the underlying REST API service

- How to run tests

- Test coverage reports

- Design decision for the MLP (minimal lovable product)

An API service and web interface for conversing with Ollama-backed large language models.

Requirements

- For building and running:
  - NVIDIA GPU
  - Java 17 Runtime Environment
  - Recent Docker with the Compose plugin
  - GNU Make
- For development and running tests:
  - Java 17 JDK
  - Node 23 with npm 10
  - GNU Make

Short demo (8min video)

How to build and run

The Makefile in the root of this repository builds and runs the dependencies, the backend service and the frontend.

Running make without any arguments will show the usage:

$ make

Available commands:

build - builds docker images for frontend and backend

docker-build-all - the same as above

docker-build-frontend - builds docker image for frontend

docker-build-backend - builds docker image for backend

docker-run-all - runs all docker containers (requires build first)

docker-run-services - runs only docker services containers (keycloak, postgresql, ollama)

docker-stop-all - stops all docker containers

test - runs all test (on the host, not docker)

test-backend - runs backend tests

test-frontend - runs frontend tests

Pass ARGS=-d for run commands to detach.

Building docker images of the service and frontend

To build the Docker images, run the make docker-build-all command:

$ make docker-build-all

This builds separate, size-optimized images for the backend service and the frontend:

$ docker images | grep chat-access

chat-access latest 9af844296fc4 2 hours ago 141MB

chat-access-web latest e254bbdd7d7e 2 hours ago 3.01MB

Running the solution

To run the whole solution, execute the make docker-run-all command:

$ make docker-run-all

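By default, the docker-run-* commands do not detach, so you can stop the services by pressing Ctrl+C. To run them in the background instead, pass ARGS=-d (for example, make docker-run-all ARGS=-d) and stop the containers later with make docker-stop-all.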

Accessing the Web Interface

Once the solution is running, the web interface is available at http://localhost:9080/.

The default username and password are both user.

You can create additional users in the Keycloak administration console at http://localhost:7080/.

The admin username is admin and the password is admin1.

New users must be created in the ChatAccess realm. For Keycloak authentication to succeed, each user needs an email address and must have the Email verified switch enabled.

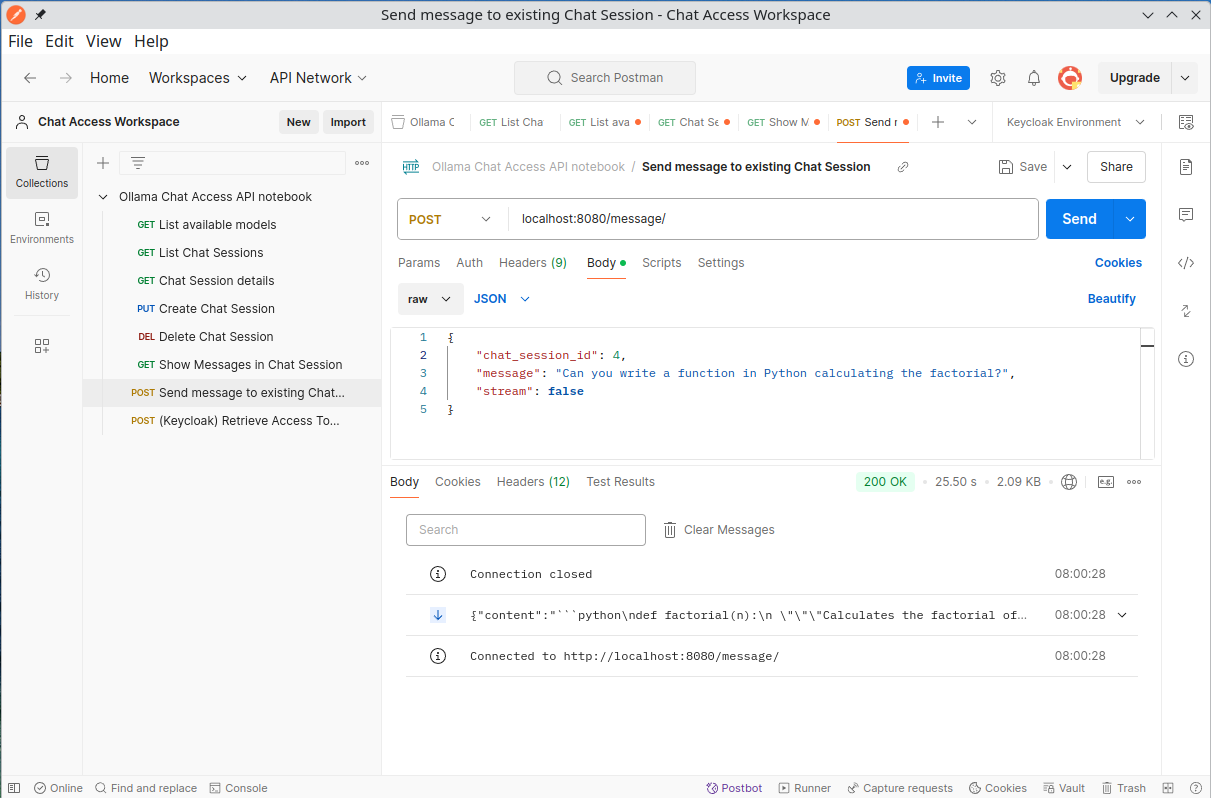
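Users can also be created through the Keycloak Admin REST API instead of the console. The Java sketch below only builds such a request and is not part of this repository. It assumes Keycloak 17 or later (no /auth path prefix) and an admin access token obtained separately (for example, with a password grant against the master realm's admin-cli client); the username, email and password are hypothetical. The emailVerified field corresponds to the Email verified switch mentioned above.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class CreateUserSketch {
    // Keycloak address and realm name as described in this README.
    static final String KEYCLOAK = "http://localhost:7080";
    static final String REALM = "ChatAccess";

    // Minimal JSON string quoting: escapes quotes, backslashes and control characters.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    // Keycloak UserRepresentation with an email address, "Email verified"
    // enabled and a non-temporary password credential.
    static String userJson(String username, String email, String password) {
        return "{\"username\":" + quote(username)
            + ",\"email\":" + quote(email)
            + ",\"emailVerified\":true"
            + ",\"enabled\":true"
            + ",\"credentials\":[{\"type\":\"password\",\"value\":" + quote(password)
            + ",\"temporary\":false}]}";
    }

    // POST /admin/realms/{realm}/users, authorized with an admin bearer token.
    static HttpRequest createUserRequest(String adminToken, String userJson) {
        return HttpRequest.newBuilder(URI.create(KEYCLOAK + "/admin/realms/" + REALM + "/users"))
            .header("Authorization", "Bearer " + adminToken)
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(userJson))
            .build();
    }

    public static void main(String[] args) {
        // HYPOTHETICAL user data and token placeholder; replace with real values.
        String body = userJson("alice", "alice@example.com", "change-me");
        HttpRequest request = createUserRequest("<admin-access-token>", body);
        System.out.println(request.method() + " " + request.uri());
        System.out.println(body);
        // Send it with java.net.http.HttpClient once a real admin token is available.
    }
}
```

Keycloak normally answers a successful creation with 201 Created; the new user can then log in to the web interface like the default one.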

Using the underlying REST API service

A ready-to-use Postman workspace is available in the repository under ./postman-workspace/.

After importing it into Postman, obtain an authorization token on the Authorization tab. The token expires from time to time and then has to be refreshed manually on the same tab.
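Outside Postman, a token can presumably be requested directly from Keycloak's OpenID Connect token endpoint. The Java sketch below assumes Keycloak 17 or later and a client in the ChatAccess realm that allows the resource owner password grant (Direct access grants enabled); the client id is a hypothetical placeholder, so check the realm configuration for the real one. Without arguments it only prints the request; with --send it calls Keycloak.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

public class TokenRequestSketch {
    // Keycloak address and realm name as described in this README.
    static final String KEYCLOAK = "http://localhost:7080";
    static final String REALM = "ChatAccess";

    // Standard Keycloak token endpoint of a realm (Keycloak 17+, no /auth prefix).
    static URI tokenEndpoint(String baseUrl, String realm) {
        return URI.create(baseUrl + "/realms/" + realm + "/protocol/openid-connect/token");
    }

    // Encodes parameters as application/x-www-form-urlencoded.
    static String formEncode(Map<String, String> params) {
        return params.entrySet().stream()
            .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8) + "="
                + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
            .collect(Collectors.joining("&"));
    }

    // Resource owner password grant for the given user.
    static HttpRequest passwordGrant(String clientId, String username, String password) {
        Map<String, String> form = new LinkedHashMap<>();
        form.put("grant_type", "password");
        form.put("client_id", clientId);
        form.put("username", username);
        form.put("password", password);
        form.put("scope", "openid");
        return HttpRequest.newBuilder(tokenEndpoint(KEYCLOAK, REALM))
            .header("Content-Type", "application/x-www-form-urlencoded")
            .POST(HttpRequest.BodyPublishers.ofString(formEncode(form)))
            .build();
    }

    public static void main(String[] args) throws Exception {
        // HYPOTHETICAL client id; user/user is the default account from this README.
        HttpRequest request = passwordGrant("chat-access-client", "user", "user");
        System.out.println(request.method() + " " + request.uri());
        if (args.length > 0 && args[0].equals("--send")) {
            HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
            // On success the JSON body contains "access_token".
            System.out.println(response.statusCode() + " " + response.body());
        }
    }
}
```

The returned access_token would then be sent as an Authorization: Bearer header, which is what Postman's OAuth 2.0 authorization does by default. Like the Postman token, it expires and has to be requested again.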

How to run tests

Running:

make test

runs the backend and frontend tests. See the requirements above, as the tests run on the host computer rather than inside Docker containers.

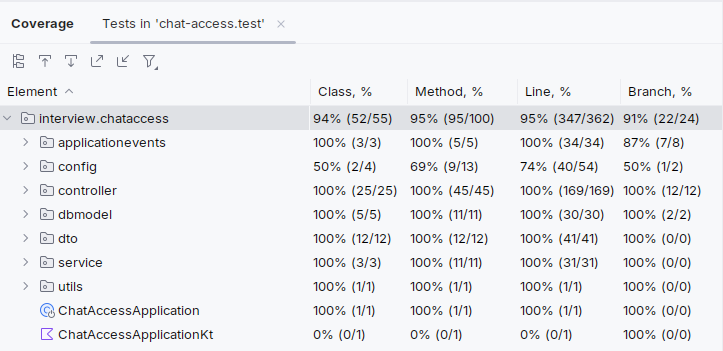

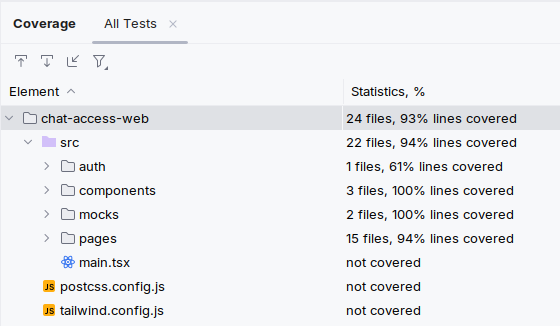

Test coverage reports

The backend service has 95% code coverage and 91% branch coverage; some configuration classes are not covered.

The frontend has 93% code coverage; some error conditions and small convenience functions, such as scroll to top, are not covered.

Design decision for the MLP (minimal lovable product)

- No internationalization: the application is available in English only.

- Users are authenticated with OAuth2/OpenID Connect via Keycloak.

- Orchestration uses Docker Compose for simplicity and is intended for demonstration purposes.

- A curated list of models is kept in standard Spring configuration, because most of the larger models won't run on my computer (I'm not a gamer).

- Models from the list are pulled on startup. This makes the first start slow; afterwards the models are cached in the user's ~/.ollama/ directory. Because this is a one-time initialization, the MLP reports its completion only in the application logs. If you see many "download stalled" messages from Ollama, the easiest workaround is to restart the ollama container so that it picks a better mirror.
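The startup pull can be pictured as one request per curated model against Ollama's /api/pull endpoint. This Java sketch is not the service's actual code: the model names are hypothetical, it assumes Ollama is reachable on its default port 11434 at localhost, and it uses the model field of recent Ollama versions (older releases called it name). With stream set to false, Ollama replies with a single response once the pull has finished. Without arguments it only prints the requests; with --send it performs them.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.List;

public class ModelPullSketch {
    // Assumption: Ollama listens on its default port and is reachable from here.
    static final String OLLAMA = "http://localhost:11434";

    // Non-streaming pull request body for one model (quotes and backslashes escaped).
    static String pullBody(String model) {
        String escaped = model.replace("\\", "\\\\").replace("\"", "\\\"");
        return "{\"model\":\"" + escaped + "\",\"stream\":false}";
    }

    static HttpRequest pullRequest(String model) {
        return HttpRequest.newBuilder(URI.create(OLLAMA + "/api/pull"))
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(pullBody(model)))
            .build();
    }

    public static void main(String[] args) throws Exception {
        // HYPOTHETICAL curated list; the real one lives in the service's Spring configuration.
        List<String> models = List.of("llama3.2:1b", "qwen2.5:0.5b");
        boolean send = args.length > 0 && args[0].equals("--send");
        HttpClient client = HttpClient.newHttpClient();
        for (String model : models) {
            HttpRequest request = pullRequest(model);
            if (!send) {
                System.out.println(request.uri() + " " + pullBody(model));
                continue;
            }
            // Blocks until Ollama reports the pull as finished or failed.
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            // As in the MLP, completion is reported only in the log output.
            System.out.println(model + ": " + response.statusCode() + " " + response.body());
        }
    }
}
```

Pulling the models one after another keeps the sketch simple; it also explains why the first start is slow, since each request blocks until its download completes.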